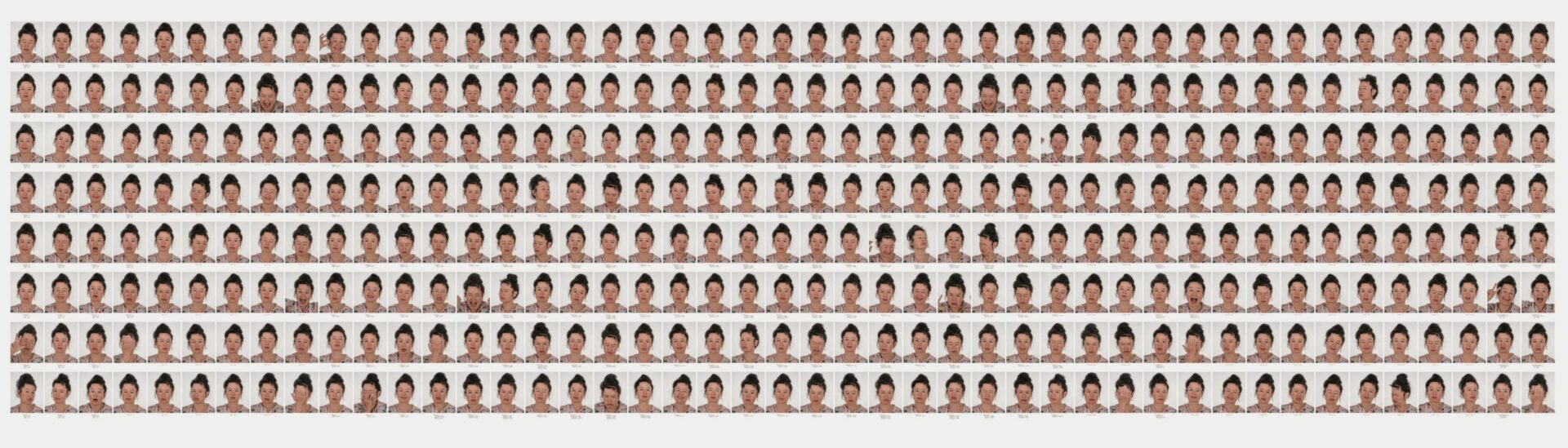

Photo: courtesy of the artist & Metro Pictures, New York

The Automation of Empathy

Much of the algorithmic output that Paglen includes is, in one way or another, questionable. In one image, the algorithm identifies Steyerl as 59.58 percent likely to be male and 40.42 percent likely to be female. This interpretation of Steyerl’s face shows how these algorithms identify only probabilities within rigid categories, whereas a great deal of research on gender emphasizes its fluidity and certainly does not establish a definitive link between facial expression and gender. In other images, photographs that would appear similar to a human eye are classified as representing completely different emotional states. Steyerl rolling her eyes back into her head, the algorithm tells us, expresses something between “neutral” and “sadness.” But these seemingly incorrect identifications aren’t the point. Instead, Paglen is showing us that computational systems “see” in radically different ways than humans do. Each image in Machine Readable Hito is, effectively, a double representation. One is the visual representation of a face that can be understood and interpreted by human capacities of vision. The other is the oblique, indirect representation, via text, of what a computer sees when it classifies a digital image. The second set of representations is the important one here, imbued as it is with an assumed objectivity, even if that objectivity is belied by the judgments at which the AI programs arrive.

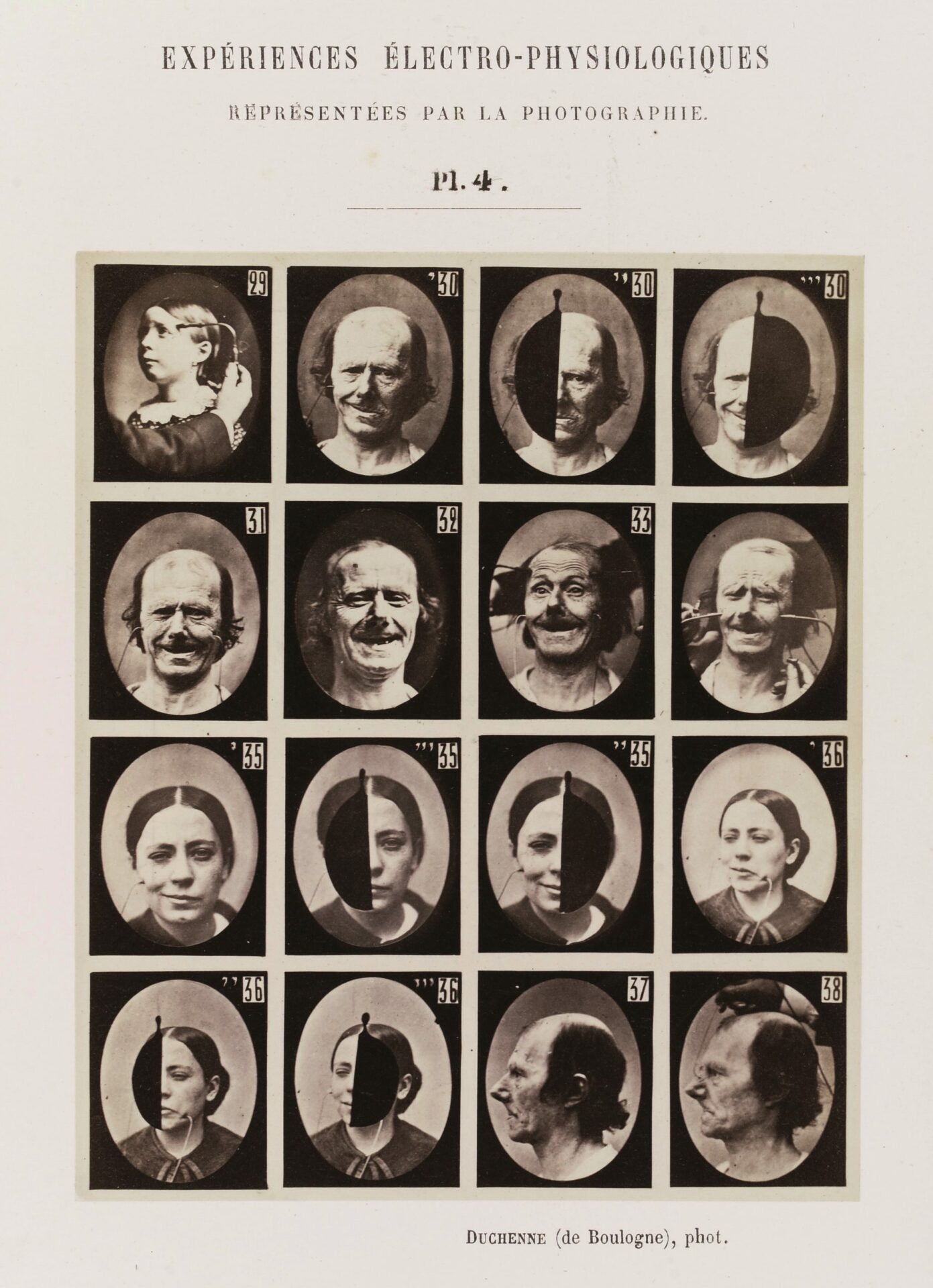

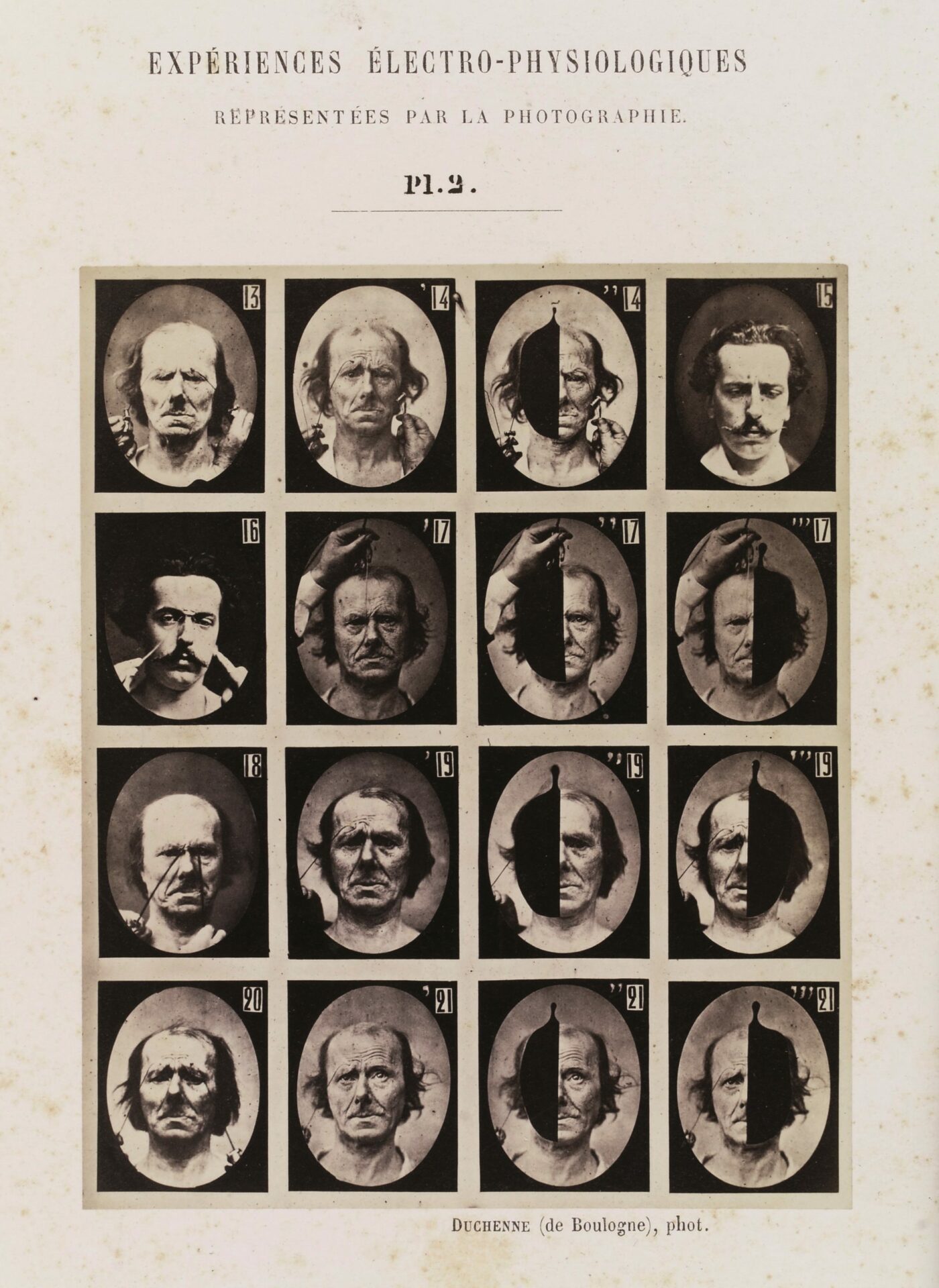

Experiments in physiology. Facial expressions.

Photos: Wellcome Collection

These technological systems cannot be challenged by dismissing their interpretations as incorrect. “The invisible world of images isn’t simply an alternative taxonomy of visuality,” Paglen argues. “It is an active, cunning, exercise of power, one ideally suited to molecular police and market operations — one designed to insert its tendrils into ever-smaller slices of everyday life.”[1] That computer vision may not classify faces accurately (at least, according to human perception) does little to change how these algorithms are used by social media monopolies, governments, and law enforcement in the service of an impersonal and automatic perpetuation of discrimination built into technology.[2] These automated systems of vision are tasked with the generation of objective truth, as if their indifference to and differentiation from human perception performs their objectivity.[3]

[1] Trevor Paglen, “Invisible Images (Your Pictures Are Looking at You),” The New Inquiry, December 8, 2016, https://thenewinquiry.com/invisible-images-your-pictures-are-looking-at-you/.

[2] Cf. Safiya Umoja Noble, Algorithms of Oppression: How Search Engines Reinforce Racism (New York: NYU Press, 2018).

[3] For an elaboration of an argument similar to this, see my book Inhuman Networks: Social Media and the Archaeology of Connection (New York: Bloomsbury, 2016).

Paglen’s concern is with the military and the police, and with how the visual culture of digital media, so pervasively utilized by these agencies of control, has been divorced from human systems of vision — a concern similar to that of other artists who engage with digital media, such as James Bridle, Adam Harvey, Zach Blas, and Steyerl herself. But the technologies for emotion recognition that Paglen uses in Machine Readable Hito also point us to how surveillance is bound up with something that may seem completely beyond the politics of state control — the psychology of empathy. It’s this link between the automation of vision and empathy that I want to highlight, and this requires that I look at the history of facial emotion in psychology and how this history relates to current psychological research on empathy.

Although a work such as Machine Readable Hito speaks to the regime of visuality defined by computational algorithms and to how this regime relates to surveillance, it also speaks to how, at a time when empathy seems to be in crisis, computational systems that overlap with police control are used to modulate and manage the possibilities for empathetic understanding.

Almost all of the emotions identified by machine-vision algorithms are derived from the work of psychologists Paul Ekman and Wallace Friesen, specifically their Facial Action Coding System. FACS, as it is known, classifies the face into the movements of individual muscles, called “action units,” and then identifies specific arrangements of action units to determine, from facial expression alone, what someone is feeling or whether someone is lying. FACS has long been foundational for technological systems of emotion detection[4] and has been instrumental in perpetuating a questionable model that posits a range of emotions experienced by everyone, interpreted universally, and displayed equivalently on the faces of all members of humanity.[5] These emotions usually include anger, disgust, fear, happiness, sadness, and surprise, which, because of the link between FACS and computational systems for recognizing and representing facial emotion, are the same emotions found in systems like Microsoft’s.

[4] Kelly Gates, Our Biometric Future: Facial Recognition Technology and the Culture of Surveillance (New York: NYU Press, 2011).

[5] For a thorough critique of this model of emotion, see Ruth Leys, The Ascent of Affect: Genealogy and Critique (Chicago: University of Chicago Press, 2017).

The emotions that FACS categorizes, and that are algorithmically identified on Hito Steyerl’s face, can be traced back to a textbook by psychologist Robert S. Woodworth, Experimental Psychology, originally published in 1938. Woodworth reviewed a number of studies on the facial expression of emotion and, in repeating those studies for quantifiable verifiability, reduced all facial expressions to six categories, some of which group together what may seem to be vastly different experiences: Love (which was also Happiness and Mirth), Surprise, Fear (which was also Suffering), Anger (which was also Determination), Disgust, and Contempt. These six categories were chosen because they satisfied requirements for quantitative verifiability, and for little else.[6]

[6] My comments here are based on the revised version of this textbook that Robert S. Woodworth coauthored with Harold Schlosberg, Experimental Psychology, rev. ed. (London: Methuen & Co. Ltd., 1954), 112–19.

Psychologists such as Woodworth were primarily concerned with an observer’s ability to judge posed emotions correctly, following claims by Darwin (who relied on the photographs of Duchenne de Boulogne) suggesting that facial expressions are evolutionary mechanisms that allow us to understand the experience of another through external appearance.[7] Sets of images (usually from Germany) created to train artists to accurately represent the passions portrayed by the human face were imported into American psychology as material for experiments that asked whether people could accurately determine the emotion signified by a particular facial expression.

[7] Charles Darwin, The Expression of the Emotions in Man and Animals (New York: D. Appleton and Company, 1897).

Machine Readable Hito, 2017.

Photo: courtesy of the artist & Metro Pictures, New York

Subjects in these experiments rarely agreed on the interpretation of a particular facial expression. Yet these psychologists repeatedly assumed that the observers’ inability to agree on the emotions represented in photographs was a problem of the observers, not of the photographs or of the simulation of emotion in them. This assumption follows from a broader cultural shift that occurred in the late 1800s and early 1900s regarding how photographs were understood as evidence.[8] Still, disagreement among observers over interpreting and identifying emotion persisted until subjects were forced to choose from a limited set of possible affects to classify the expressions that images represented: the limited choices identified by Woodworth in Experimental Psychology. The computational analysis of facial expression further removes these problems of subjective judgment. As Paglen suggests, it is the algorithmic classifications of Machine Readable Hito that possess the force of objectivity, not the visual image.

[8] Joel Snyder, “Res Ipsa Loquitur,” in Things That Talk: Object Lessons from Art and Science, ed. Lorraine Daston (New York: Zone Books, 2008), 195–221.

The psychology of emotion was derived from a particular use of photography, one that suggested that standard categories for grouping photographs would reveal how visual interpretations of faces allowed observers access to the emotional states of others. This is, perhaps, one way to define what empathy is. Empathy did not originate as a psychological construct. Rather, it was imported into psychology from the German aesthetic theory of the late 1800s and early 1900s and its discussion of Einfühlung, a term developed to describe a kind of mirroring or “feeling-into” that one experienced when looking at a particularly moving work of art, or at another human. As Einfühlung evolved into the psychological concept of empathy, it expanded to describe all human relations, though empathy was often sidelined as a construct until around the 1970s, in part because of challenges within psychology, within philosophy, and within aesthetic debates that opposed naturalistic “feeling-into” to modernist alienation.[9] Empathy is difficult to discuss precisely, since it has so many subtly different definitions, but since the 1970s it has been used to describe a set of psychological disorders defined by an inability to correlate facial expression with the neurological means for experiencing specific emotional states. Autism, psychopathy, and borderline personality disorder, the three major psychological conditions defined in this way, are all thought to be produced by some disruption in the ability to see another’s facial expression and either mirror it or “know” in one’s mind what that expression signifies.[10]

[9] Grant Bollmer, “Gaming Formalism and the Aesthetics of Empathy,” Leonardo Electronic Almanac (forthcoming).

[10] Grant Bollmer, “Pathologies of Affect: The ‘New Wounded’ and the Politics of Ontology,” Cultural Studies 28, no. 2 (2014): 298–326.

Elsewhere, empathy has been linked to the assumed existence of “mirror neurons” that permit the brain to mirror the emotional states of others, even though mirror neurons, while they exist in macaque monkeys, have not been proved to exist in the human brain. Regardless, empathy is linked to a psychological mirroring of another, a mirroring articulated most explicitly through the ability to read the expressions on another’s face.

Machine Readable Hito demonstrates how this history of psychology, and the assumptions it makes about vision, emotion, and empathetic relation, have become part of the visual culture of digital media and, like other forms of surveillance, subject to the automated mechanisms of computers. Even setting aside the extensive debate surrounding the basic categories of emotion and empathy as operationalized by psychology, works such as Paglen’s ask us to question the “objectivity” and “rationality” of how scientific categories for human relations are built into digital media and automated. And, beyond overtly coercive powers of control, they ask us to question how the automation of emotion, the automation of empathy, will be put into the service of the police, the military, and social media monopolies, all of which use computer vision to extract information from images of the face, automating a particular understanding of mirroring, emotion, and empathy in digital culture. They point us toward a renewed phrenology or physiognomy, one used to control by means of “modulating affect.”[11] What Machine Readable Hito shows, above all, is how technological means for fostering empathy, which tend to defer to algorithmic systems for identifying emotion on the face, may have little to do with human experience and perception and instead involve a rationalized system for rigidly ordering visual knowledge.

[11] See Mark Andrejevic, Infoglut: How Too Much Information Is Changing the Way We Think and Know (New York: Routledge, 2013), 52–54, 77–110.

French translation by Margot Lacroix